
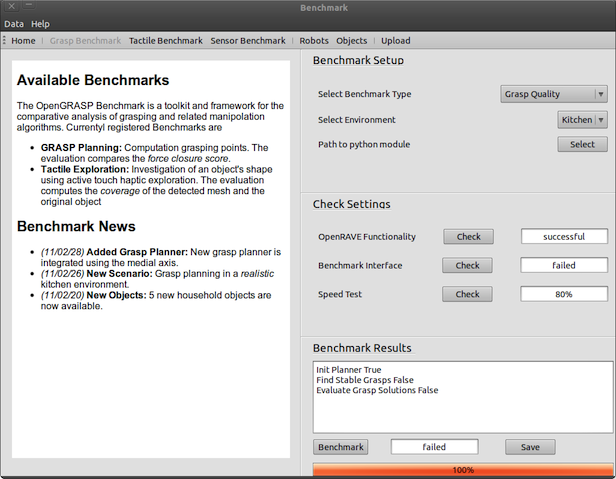

Welcome to the OpenGRASP Benchmark Suite, a new software environment for the comparative evaluation of algorithms for grasping and dexterous manipulation. Its key aim is to provide a tool that lets every laboratory reproduce well-defined experiments in real-life scenarios and, hence, to offer benchmarks that pave the way for objective comparison and competition in the field of grasping. To achieve this, experiments in the OpenGRASP Benchmark Suite run on the sound open-source software platform that is used throughout the OpenGRASP project. The benchmark environment has an extensible structure so that it can include a wider range of benchmarks defined by robotics researchers. It is fully integrated into the OpenGRASP Toolkit, builds on the OpenRAVE project, and includes the grasp-specific extensions and the tool for creating and integrating new robot models provided by OpenGRASP.

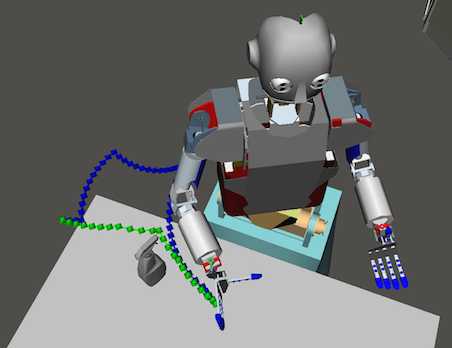

Currently, we provide ready-to-use benchmarks for grasp and motion planning, which are included as case studies, as well as a library of models of everyday domestic objects and a real-life scenario that features a humanoid robot acting in a kitchen (already included in the downloadable package).

Contact stefan.ulbrich at kit.edu

There are two options for getting the Benchmark Suite.

Note, however, that the suite currently runs on Linux only.

First, get the sources:

$> svn co https://opengrasp.svn.sourceforge.net/svnroot/opengrasp/Benchmark/ Benchmark

Then build the installation package with:

$> cd BenchmarkSuite

$> ./createInstaller.py -p <your_directory_of_choice>

This will place a file Benchmarkgui.tar.gz in <your_directory_of_choice>.

Retrieve the installation package by one of the procedures mentioned above, extract the package, and run the install script:

$> tar xvzf Benchmark*.tar.gz

$> cd BenchmarkGui

$> ./install.py -l

The -l option installs the software locally into a folder named localinstall, which is useful if no superuser rights are available. The script also obtains and installs all Python dependencies needed to run the software. Finally, the software can be started by running:

localinstall/ $> ./Benchmark.py
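
The unpacking step above can also be scripted. The following is a minimal Python sketch, not part of the Benchmark Suite itself: the helper names `is_supported_platform`, `find_package` and `extract_package` are hypothetical and only mirror the shell commands shown above (locating `Benchmark*.tar.gz` and unpacking it). Running `./install.py -l` afterwards is left to the shell.

```python
import glob
import os
import sys
import tarfile


def is_supported_platform(platform=sys.platform):
    """Return True on Linux, the only platform the suite currently runs on."""
    return platform.startswith("linux")


def find_package(directory="."):
    """Return the first archive matching Benchmark*.tar.gz, or None if there is none."""
    matches = sorted(glob.glob(os.path.join(directory, "Benchmark*.tar.gz")))
    return matches[0] if matches else None


def extract_package(archive_path, destination="."):
    """Unpack a gzip-compressed tar archive (like `tar xzf`) and return its member names."""
    with tarfile.open(archive_path, "r:gz") as archive:
        names = archive.getnames()
        archive.extractall(destination)
    return names
```

A typical use would be to call `find_package()` in the download directory, pass the result to `extract_package()`, and then change into the extracted folder to run the install script as described above.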

The OpenGRASP Benchmarking Suite distinguishes between use-cases, benchmarks and challenges.

Note: The OpenGRASP Benchmark Suite is still a work in progress, and it relies heavily on input and testing from the robotics community. Please feel free to contact the developers if you have questions, have found a bug, or have suggestions of any kind.

You can browse the programming interface (API) documentation online or build it yourself if you downloaded the sources via svn:

$> cd BenchmarkGUI/docs

$> make

This builds the API documentation, which also includes tutorials for building benchmarks and use-cases.

In this section, we show how to integrate SIMOX, an open-source C++ library developed at the Humanoids and Intelligence Systems Lab in Karlsruhe, Germany, into a use-case that runs the motion planning benchmark. The use-case uses Boost::Python to wrap the library code and make it accessible in the benchmark. In the video section, you can see a video of the benchmark running in the environment.

The sources for this example are in the source distribution of the software and can be downloaded separately with:

$> svn co https://opengrasp.svn.sourceforge.net/svnroot/opengrasp/Benchmark/examples examples
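
A C++ library wrapped with Boost::Python is compiled into an extension module that Python imports like any other module. As a rough sketch of what the Python side of such a use-case might start with (the module name `simox_wrapper` and the helper `load_wrapped_library` are hypothetical and are not taken from the example sources):

```python
import importlib


def load_wrapped_library(module_name):
    """Import a compiled extension module by name; return None if it is not installed."""
    try:
        return importlib.import_module(module_name)
    except ImportError:
        return None


# "simox_wrapper" is a hypothetical module name used only for illustration.
planner_lib = load_wrapped_library("simox_wrapper")
if planner_lib is None:
    print("Wrapped library not found; build the Boost::Python extension first.")
```

Returning `None` instead of raising lets a use-case report a missing build clearly before the benchmark starts; the actual names exposed by the wrapper depend on how the example sources define it.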

Under construction: the rest of the tutorial is currently being written and will be published here soon.